Dear all,

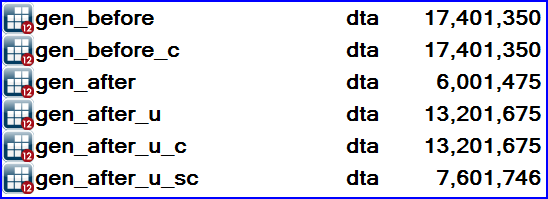

I am working with a large dataset in Stata 13 and would like to store it in the most compact form. The dataset has a number of string variables of various lengths (currently all of them fit within the old 244-character limit). Converting some of them to strLs seems to reduce the file size, but I am unsure whether converting all of them would be beneficial. All further processing will use these existing variables read-only, so their lengths and content will not change.

I am looking for empirical guidance on the tradeoff between strL and strF in Stata 13. For example, it follows from the dta-117 specification that switching a str8 variable to strL can only increase the file size, but it is not clear what happens for, say, str20. I do anticipate a large number of duplicates in these strings, but mostly among the short ones; the longer ones are likely to be unique.
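
A back-of-the-envelope version of this tradeoff, assuming the dta-117 layout as I read it (worth checking against `help dta`): a strF of width $w$ stores $w$ bytes per observation; a strL stores an 8-byte $(v,o)$ reference per observation, with $(0,0)$ meaning the empty string; and each stored strL value (GSO) adds a 16-byte header (`GSO`, $v$, $o$, $t$, $len$) plus its contents including a trailing null byte. With $n$ observations, $k$ distinct non-empty values of mean length $\bar{\ell}$ bytes, and each distinct value written once:

$$
\begin{aligned}
S_F &= n\,w \\
S_L &= 8n + k\,(16 + \bar{\ell} + 1) = 8n + k\,(17 + \bar{\ell}) \\
S_L < S_F \;&\iff\; \frac{k}{n} < \frac{w - 8}{17 + \bar{\ell}}
\end{aligned}
$$

For $w \le 8$ the right-hand side is never positive, which matches the str8 case. For str20 with values of about 20 bytes, strL pays off only if $k/n < 12/37 \approx 0.32$, i.e. fewer than roughly a third of the observations carry distinct values. If duplicates do not share a GSO, $k$ becomes the number of non-empty observations instead. This counts file bytes only; the in-memory representation may differ.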

Should I devise my own storage strategy, or should I trust compress to find the best storage types? For example, I never see it changing types from strF to strL, and I cannot think of a reason why. Imho this would be beneficial for long datasets with repetitive long content, or for variables holding a few long strings and mostly short ones, and it tempts me to write a supcompress command that achieves better storage than compress does. Is there something non-obvious I should be aware of?
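
One way a hypothetical supcompress could make the per-variable decision, sketched in Python rather than Stata so the arithmetic is easy to check. It assumes the dta-117 byte counts as I read them (8-byte reference per observation, (0,0) for empty strings, 16-byte GSO header plus contents with a trailing null), works on values already pulled out of the dataset, and computes the strL size both when duplicates share one GSO and when they do not. The names `choose_types`, `strf_bytes` and `strl_bytes` are illustrative only, not Stata commands.

```python
from typing import Dict, List, Tuple

# ASSUMED dta-117 byte counts (verify against Stata's -help dta-):
#   strF: every observation stores exactly `width` bytes.
#   strL: every observation stores an 8-byte (v, o) reference; (0, 0) = "".
#   Each stored value (GSO) costs "GSO"(3) + v(4) + o(4) + t(1) + len(4)
#   = 16 bytes, plus its contents with a trailing null byte.
REF_BYTES = 8
GSO_HEADER = 16


def strf_bytes(width: int, values: List[str]) -> int:
    """File bytes for a str<width> variable (data section only)."""
    return width * len(values)


def strl_bytes(values: List[str], shared: bool = True) -> int:
    """File bytes for a strL variable: references plus GSOs.

    shared=True: each distinct non-empty value is stored once (best case).
    shared=False: every non-empty observation gets its own GSO (worst case).
    """
    nonempty = [v for v in values if v != ""]
    stored = set(nonempty) if shared else nonempty
    gsos = sum(GSO_HEADER + len(v.encode("utf-8")) + 1 for v in stored)
    return REF_BYTES * len(values) + gsos


def choose_types(variables: Dict[str, Tuple[int, List[str]]],
                 shared: bool = True) -> Dict[str, str]:
    """Hypothetical supcompress rule: use strL only where it is strictly smaller."""
    choice = {}
    for name, (width, values) in variables.items():
        if strl_bytes(values, shared) < strf_bytes(width, values):
            choice[name] = "strL"
        else:
            choice[name] = f"str{width}"
    return choice


if __name__ == "__main__":
    n = 100
    data = {
        # short, highly repetitive codes
        "region": (8, ["north", "south"] * (n // 2)),
        # str200 that is mostly empty, with a few long unique entries
        "comment": (200, [f"{i:050d}" for i in range(5)] + [""] * (n - 5)),
        # str20 identifiers, all distinct and full length
        "id": (20, [f"{i:020d}" for i in range(n)]),
    }
    print(choose_types(data))
    # prints {'region': 'str8', 'comment': 'strL', 'id': 'str20'}
```

The `region` result mirrors the str8 observation, and `comment` is the "few long strings, mostly short ones" case where strL wins even without sharing. The sketch does not establish whether Stata actually writes duplicates as a single GSO when saving, so running it with both `shared` settings gives a bound rather than a prediction.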

I would also like to know what exactly r(width) reports for datasets containing strLs, given that the length of a strL is not fixed.

Finally, how can I capture the size of the dataset in memory programmatically? Both memory and describe report this size, but neither saves it as a returned value.

Thank you, Sergiy Radyakin